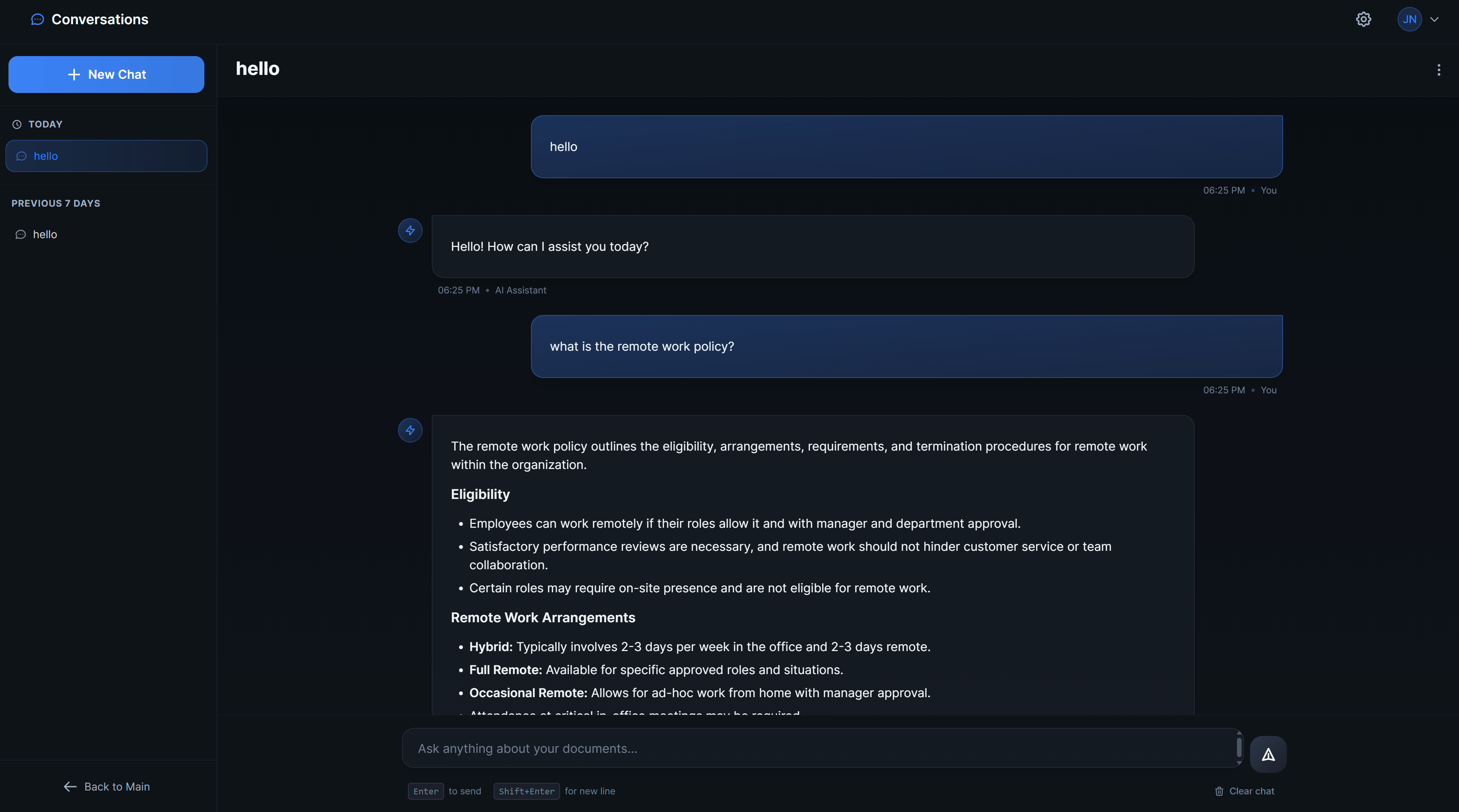

See It In Action

Experience the power of intelligent document conversations

Intelligent Conversations with Your Documents

Get context-aware answers powered by your knowledge base

Natural Conversations

Chat naturally with your documents and get intelligent responses

Enterprise Security

HttpOnly cookies, E2E encryption, log sanitization, and RBAC

Lightning Fast

Semantic caching reduces costs by 80% and returns cached responses instantly

Context-Aware

Understands your documents and provides relevant answers

Multiple LLMs

Supports OpenAI, Gemini, Hugging Face, and local models

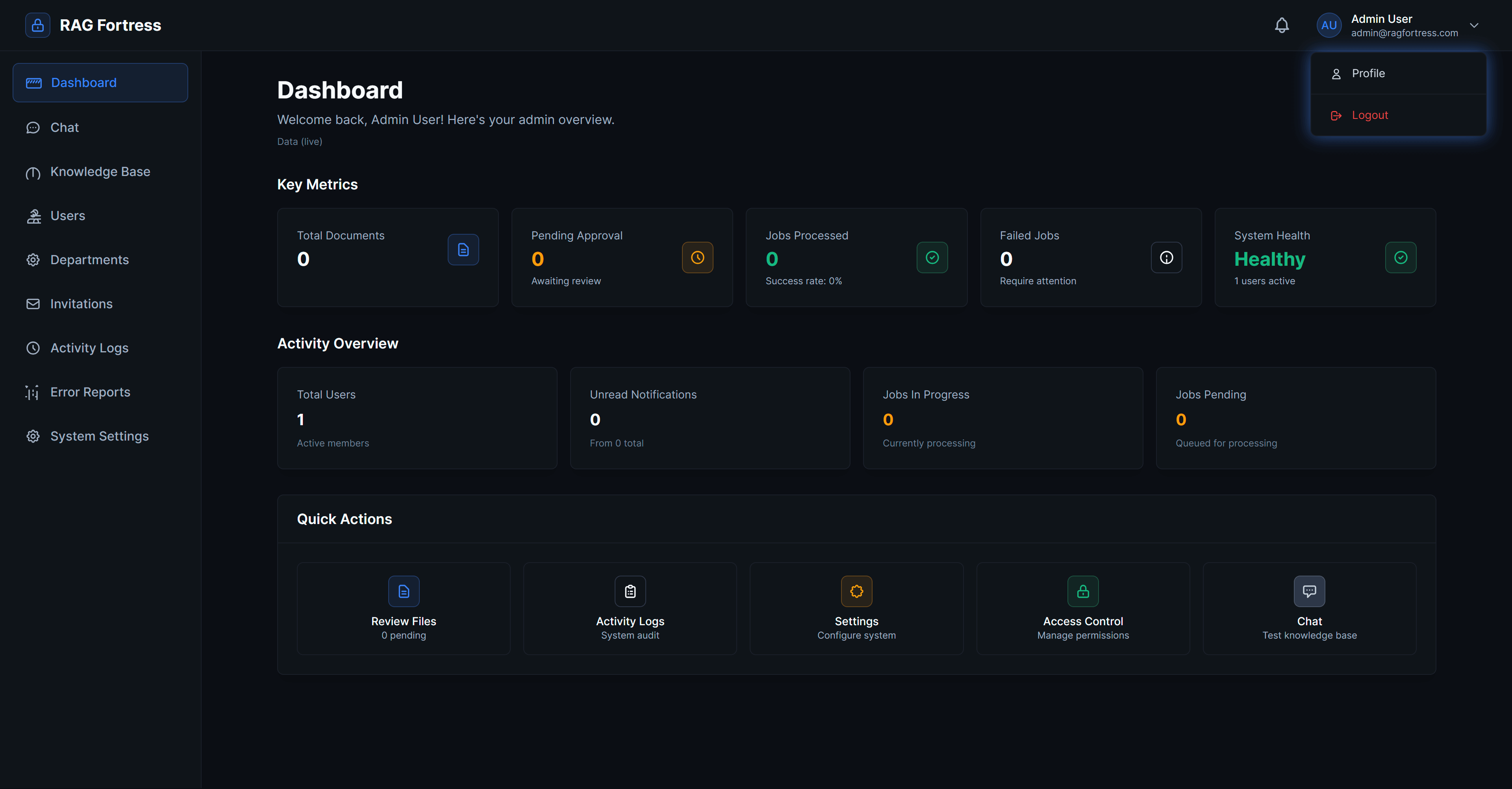

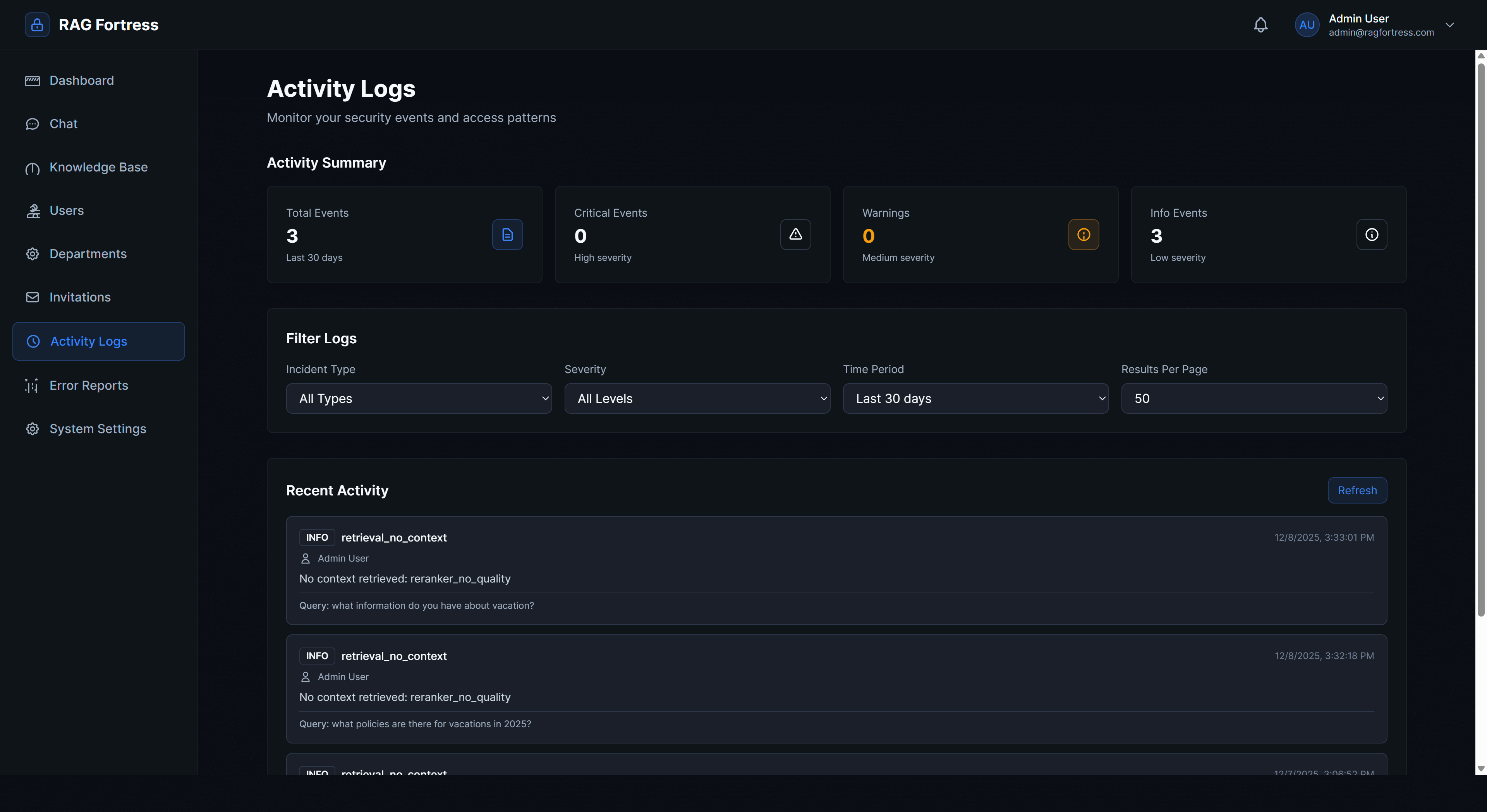

Activity Tracking

Complete audit trails and analytics dashboard
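
For readers curious how the semantic caching mentioned under "Lightning Fast" can work in principle, here is a minimal sketch. It is not the platform's actual implementation. The names `SemanticCache`, `embed`, `answer_with_cache`, and the `0.8` threshold are hypothetical, and the bag-of-words `embed` function is a toy stand-in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Returns a stored answer when a new question is similar enough to an old one."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # hypothetical similarity cut-off
        self.entries = []           # list of (embedding, answer) pairs

    def get(self, question: str):
        """Return the best cached answer at or above the threshold, else None."""
        query = embed(question)
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, question: str, answer: str) -> None:
        self.entries.append((embed(question), answer))


def answer_with_cache(cache: SemanticCache, question: str, ask_llm):
    """Call the (paid) LLM only on a cache miss, then store its answer."""
    cached = cache.get(question)
    if cached is not None:
        return cached
    answer = ask_llm(question)
    cache.put(question, answer)
    return answer
```

In this sketch, a question that closely matches an earlier one is answered from the cache and never reaches the model, which is where the cost and latency savings would come from. A production setup would presumably swap the toy `embed` for a real embedding model and a vector store lookup.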

Complete Platform Overview

Admin Dashboard

Activity monitoring & analytics

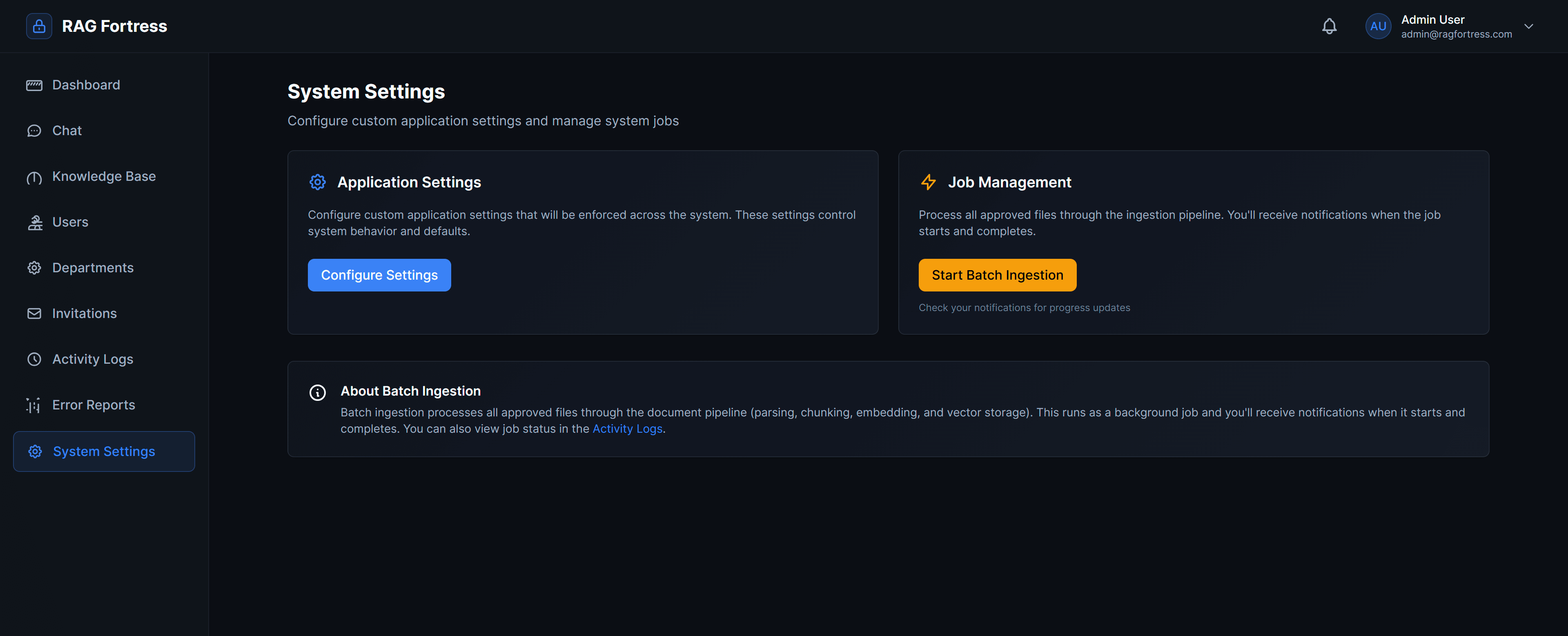

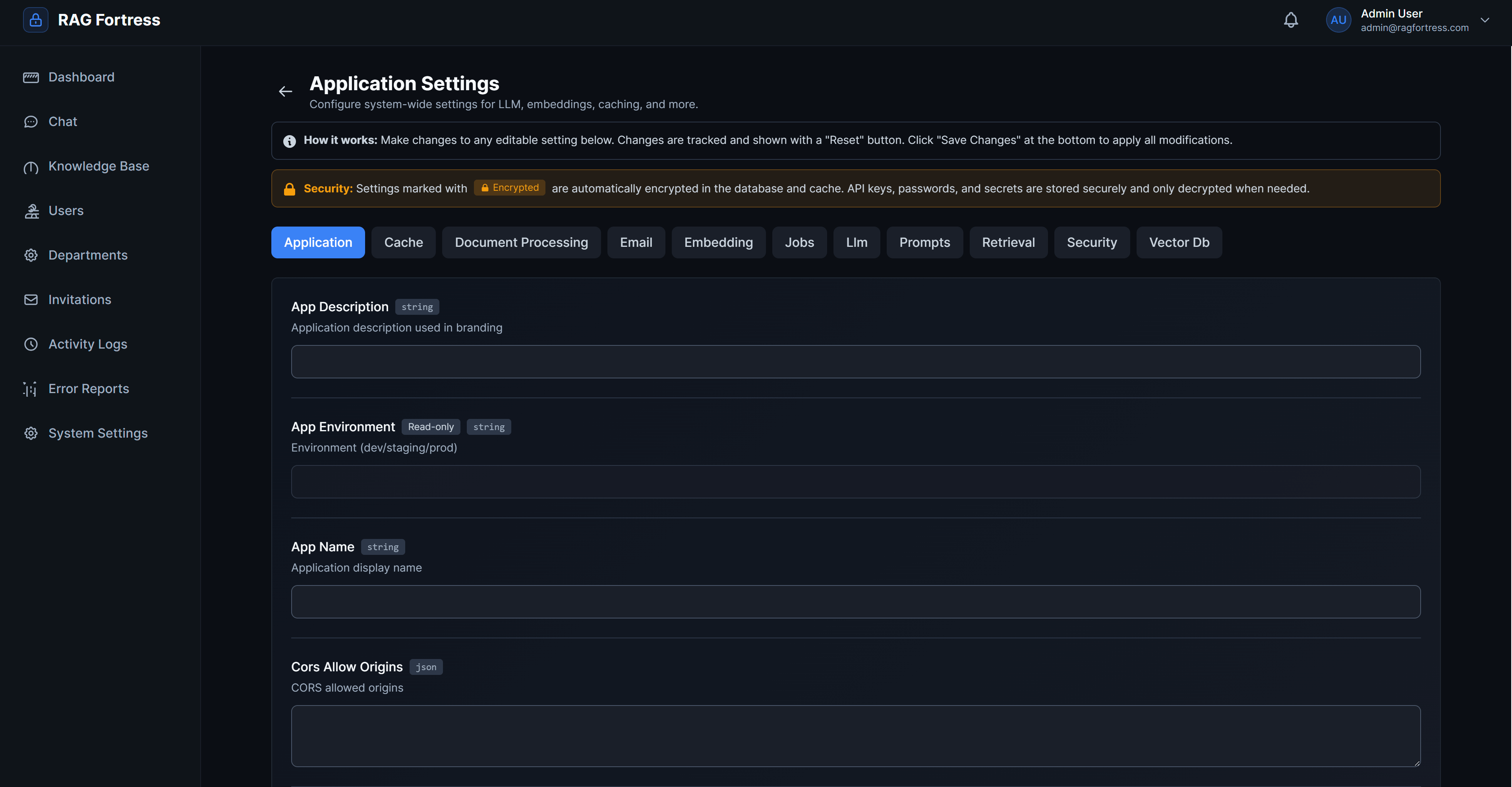

Application Settings

Configure LLMs & vector stores

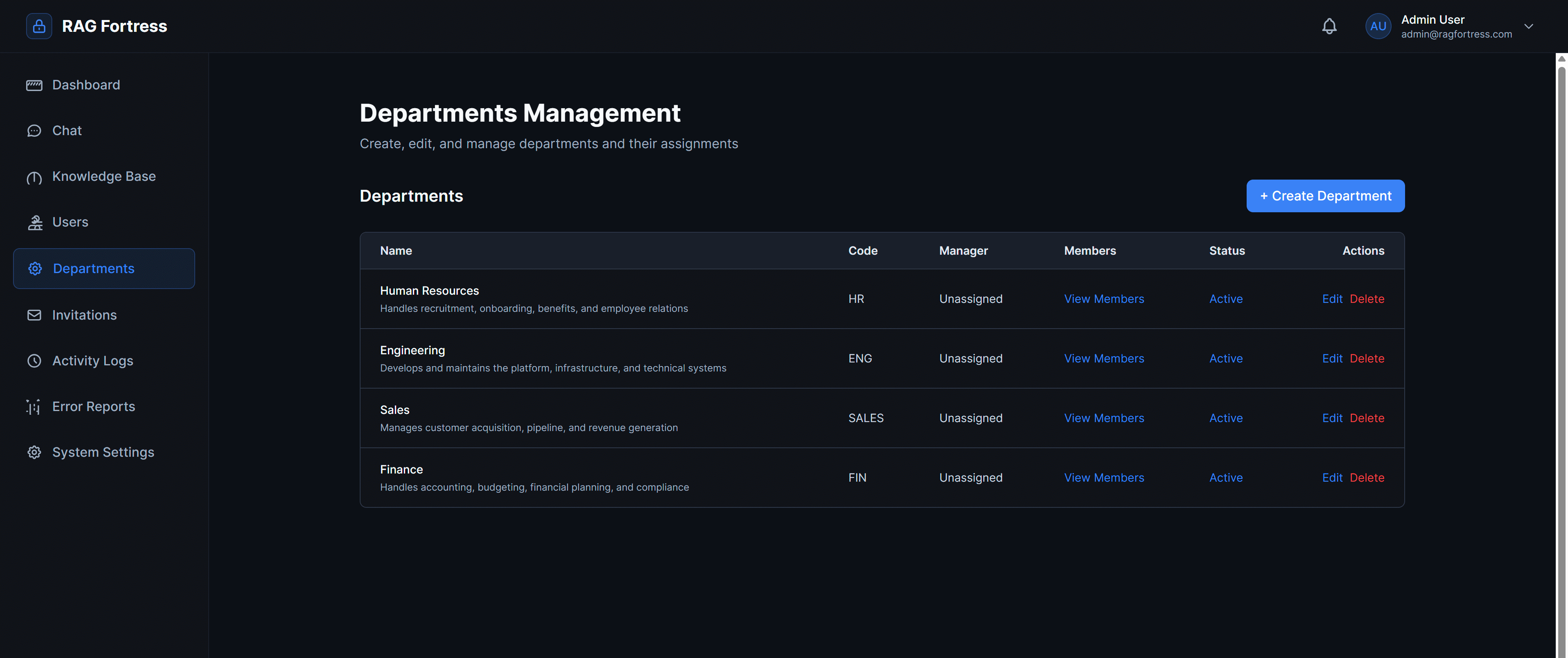

Department Management

Organize teams & access control

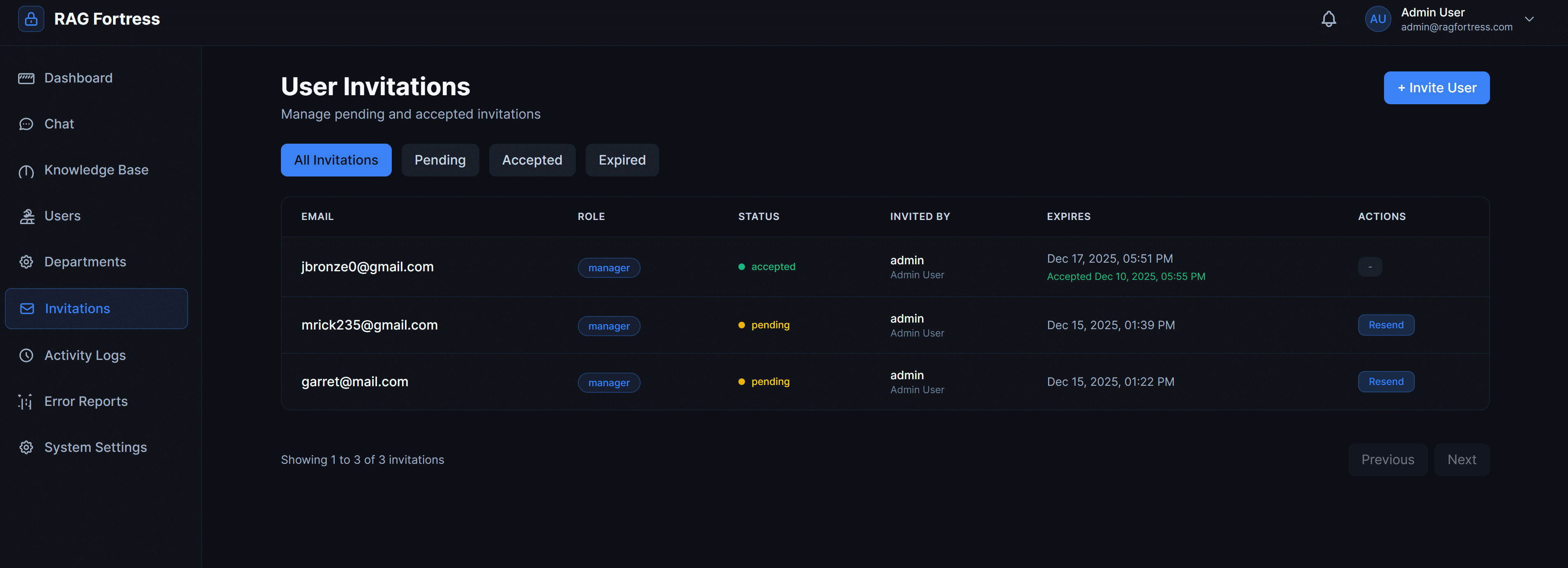

Multi-Tier Invitations

Email-based user onboarding

Activity Logger

Detailed audit logs

Detailed Configuration

Fine-tune every setting